The fact that libuv runs the poll phase in the middle of phase execution, instead of at the beginning, has to do with needing to handle its other APIs.

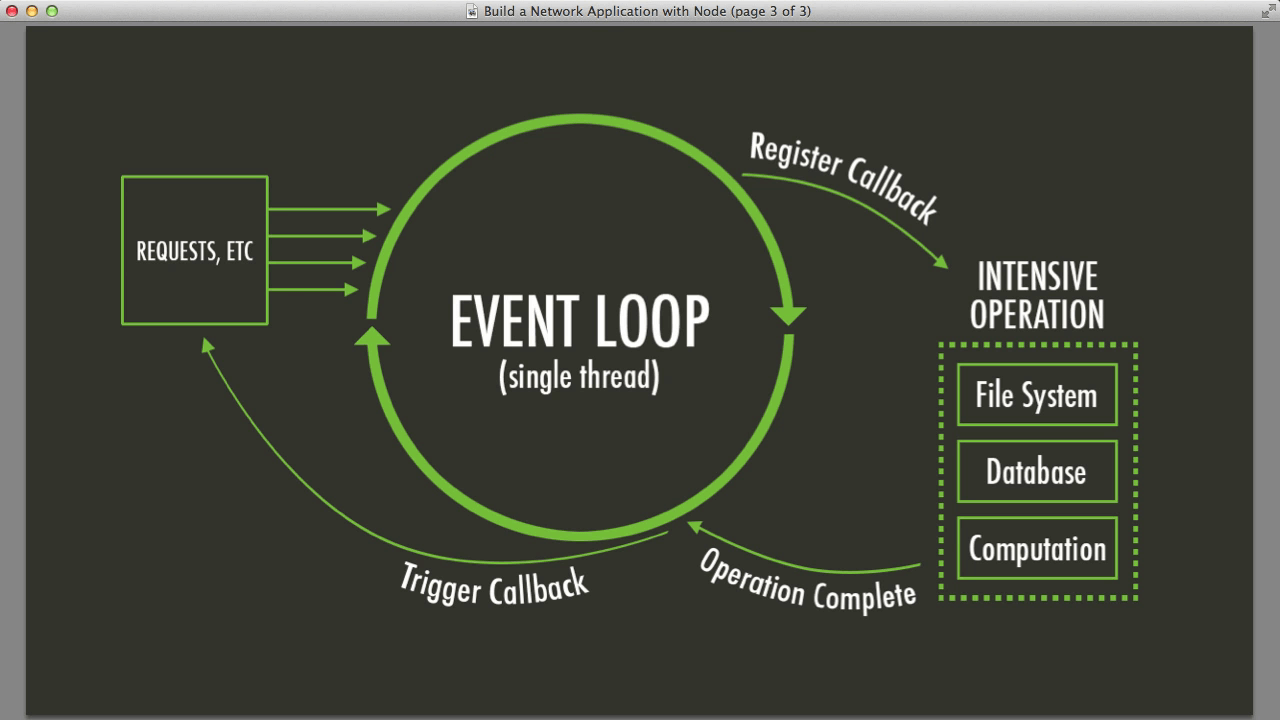

Now that we have had a brief review, it is time to put that information out of our minds. The following is a diagram about the event loop from the official Node.js docs (which is a variation of a diagram I created for a blog post back in 2015) about the order of execution for each of libuv's phases: By the end of this post, I hope to have explained adequately about the ELU and how event loops work in general to give you the confidence to interpret the resulting metrics. It sounds simple enough, but some subtlety can be easily missed. The simplest definition of event loop utilization (or ELU) is the ratio of time the event loop is not idling in the event provider to the total time the event loop is running. One of those is now known as event loop utilization. Second, a few of the lowest level metrics showed surprising patterns that revealed how the application was performing. Either because there was too much noise or because the information could be replicated through other metrics. First, most of these metrics didn't give additional insight. For example, a few of these are counting the number of events processed, timing measurements for every phase and subphase of the event loop, and tracking the amount of data written and read from streams. Initially, I added measurements for over 30 additional metrics to libuv and Node.

To prevent further confusion, at the end of this post are proper definitions of event loop related terms. Eventually, I plan to write about all aspects of my research, but today we will focus on a metric that has already been added to Node.Ī quick note before we continue: Terms are inconsistently thrown around to define parts of the event loop, such as the "event loop tick" that can either refer to the next event loop iteration, the next event loop phase, or a "sub-phase" of the event loop that is processed before the stack has completely exited. I've run a few hundred hours of benchmarks and collected over one million data points to make sure my analysis was correct. The goal of this was to indirectly infer the state of the application without introducing measurable overhead. In the last year, I've spent many hours writing patches for libuv and Node to collect new metrics.
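To make the ELU definition above concrete, here is a minimal TypeScript sketch. It models one loop iteration as the phase sequence from the Node.js docs diagram (timers, pending callbacks, idle/prepare, poll, check, close callbacks) and counts only the time the poll phase spends blocked waiting on the event provider as idle; everything else is active. The `IterationTiming` shape, the helper names (`accountIteration`, `utilization`, `utilizationBetween`) and all timing values are hypothetical illustrations, not libuv or Node internals. In real applications Node exposes this metric through `performance.eventLoopUtilization()` from `perf_hooks`, which reports `idle`, `active` and `utilization`; the two-sample helper below only loosely mirrors its two-argument form.

```typescript
// A minimal, hypothetical model of one event loop iteration and of event loop
// utilization (ELU). Phase names follow the Node.js docs diagram; the timings
// are made-up inputs, not measurements taken from libuv.

const PHASES = [
  "timers",
  "pending callbacks",
  "idle, prepare",
  "poll", // where the loop may block in the event provider
  "check",
  "close callbacks",
] as const;

type Phase = (typeof PHASES)[number];

// Cumulative time (ms) spent idling in the event provider vs. everything else.
interface EluSample {
  idle: number;
  active: number;
}

// Hypothetical timings for one iteration: time spent running work in each
// phase, plus how long the poll phase waited for events.
interface IterationTiming {
  phaseCost: Partial<Record<Phase, number>>;
  pollWait: number;
}

// Walk the phases in order, counting only the poll wait as idle time.
function accountIteration(t: IterationTiming): EluSample {
  let idle = 0;
  let active = 0;
  for (const phase of PHASES) {
    active += t.phaseCost[phase] ?? 0;
    if (phase === "poll") idle += t.pollWait;
  }
  return { idle, active };
}

// ELU = time not idling in the event provider / total time the loop is running.
function utilization(s: EluSample): number {
  const total = s.idle + s.active;
  return total > 0 ? s.active / total : 0; // no elapsed time: report 0
}

// ELU over an interval, given two cumulative samples (later minus earlier).
function utilizationBetween(later: EluSample, earlier: EluSample): number {
  return utilization({
    idle: later.idle - earlier.idle,
    active: later.active - earlier.active,
  });
}

// Usage: accumulate a few iterations, then compute ELU for the whole run.
const iterations: IterationTiming[] = [
  { phaseCost: { timers: 2, poll: 3 }, pollWait: 5 }, // 5 active, 5 idle
  { phaseCost: { check: 1 }, pollWait: 9 }, // 1 active, 9 idle
];
let total: EluSample = { idle: 0, active: 0 };
for (const it of iterations) {
  const s = accountIteration(it);
  total = { idle: total.idle + s.idle, active: total.active + s.active };
}
console.log(utilization(total)); // 6 / 20 = 0.3
```

Because idle time here is only the wait inside the event provider, callbacks that run during the poll phase still count as active, which is why the first iteration reports 5 ms active even though 3 ms of it is spent in poll.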
